Motion capture (mocap) technology records real-world movement as a 3D digital model, which can be used by animation software to create new characters and scenes.

Animators have always been challenged by reproducing realistic movement and, in the analog age, often traced over actual movie footage to give the characters lifelike personalities – an approach used in the 1937 Disney classic Snow White and the Seven Dwarfs.

In the digital age, a number of techniques have evolved to allow incredibly detailed models of physical movement to be captured, stored, and manipulated. Certain types of mocap can also record the detailed movements and expressions on an actor’s face, as epitomized by Andy Serkis’s famous portrayal of Gollum in The Lord of the Rings trilogy. In such instances, the term “performance capture” is often used instead.

The last 20 years have seen an explosion in the use of mocap, ranging from enormously expensive real-time systems used by blockbuster movies and video games to simple apps on smartphones used by sports coaches and hobby animators.

In this article, we’ll look at how and where mocap underpins a growing number of applications, and we’ll go over the technologies for capturing motion, ranging from the classic “marker-based” solutions to emerging “markerless” approaches. We’ll also look at how mocap data can be stored and shared with applications from kinetic analysis to graphics software, as well as share a quick overview of how mocap data is transformed into compelling animations.

Multiple Applications

The biggest application of mocap is undoubtedly in entertainment. Classic film characters including Caesar from the Planet of the Apes franchise, Smaug the dragon from The Hobbit trilogy, Neytiri from Avatar, and many more have only been made possible by motion capture, allowing a level of detail and realism that couldn’t be animated by computer-generated imagery (CGI) alone.

Blockbuster video games including Halo and Call of Duty also rely heavily on mocap to bring their main characters to life, and their publishers invest in a wide range of related technology to immerse their users in ever more realistic action.

Much featured in news headlines, Swedish supergroup ABBA have returned using mocap to capture their performances and recreate their stage personas from 40 years ago as virtual “ABBAtars”. It’s widely expected that this technology will be adopted by many other top acts in coming years.

However, we shouldn’t lose sight of the many more practical applications, too, including the analysis of top athletes to improve their performance or assisting medical professionals in studying and diagnosing body kinetics issues. Motion capture also provides realism to scenarios played out in virtual training environments for military and civil emergency response teams.

With such a growing range of applications, it’s not surprising that the underlying mocap technologies continue to evolve, offering more accurate motion capture at lower cost. These range from systems that depend on tracking optical markers, such as the classic “ping-pong ball suits” worn by mocap actors, to modern tools that derive 3D models from video alone.

Next, let’s take a closer look at mocap technologies.

Marker-Based Mocap

The most common and best-known mocap technique uses optical “markers” attached to key points on an actor (or object) that are tracked using multiple cameras. These markers can either be “passive” and reflect light or “active” and emit their own light. The recorded pattern of moving markers is then reconstructed as a 3D model using sophisticated software. This typically depends on articulating a digital “skeleton” that connects virtual copies of these physical markers.
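To give a feel for how a marker seen by more than one camera can be placed in 3D, here is a minimal sketch that intersects two viewing rays and returns the midpoint of the shortest segment between them. The function name `triangulate_midpoint`, the camera positions, and the ray directions are all hypothetical; real systems use many calibrated cameras and more robust solvers.

```python
def triangulate_midpoint(o1, d1, o2, d2):
    """Estimate a 3D point from two camera rays (origin o, direction d).

    Returns the midpoint of the shortest segment joining the two rays.
    """
    def dot(p, q):
        return sum(x * y for x, y in zip(p, q))

    w = [p - q for p, q in zip(o1, o2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; cannot triangulate")
    t1 = (b * e - c * d) / denom  # distance parameter along ray 1
    t2 = (a * e - b * d) / denom  # distance parameter along ray 2
    p1 = [o + t1 * k for o, k in zip(o1, d1)]
    p2 = [o + t2 * k for o, k in zip(o2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two hypothetical cameras, 2 m apart, both looking at the same marker.
marker = triangulate_midpoint([0, 0, 0], [1, 1, 5], [2, 0, 0], [-1, 1, 5])
```

With noisy measurements the two rays rarely meet exactly, which is why the midpoint of the closest approach is used here rather than a true intersection.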

The more cameras that are used, the more accurate the motion capture, although at the expense of significantly increased computer processing. Software also has to take care of cases where markers are concealed or covered up due to certain movements.
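One simple way software might bridge a short occlusion is to interpolate between the last and next frames in which the marker was visible. The sketch below does this for a single coordinate of one marker; the function name `fill_gaps` and the use of `None` for missing samples are assumptions made for illustration, and commercial tools typically use more sophisticated, skeleton-aware methods.

```python
def fill_gaps(track):
    """Linearly interpolate missing samples (None) in a 1D marker track.

    Gaps at the very start or end are left as None because there is
    nothing to interpolate between.
    """
    filled = list(track)
    known = [i for i, v in enumerate(track) if v is not None]
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            frac = (i - left) / span
            filled[i] = track[left] + frac * (track[right] - track[left])
    return filled


# A marker hidden for two frames, then lost again at the end of the take.
repaired = fill_gaps([0.0, None, None, 3.0, None])
```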

Markers have to be positioned carefully, so actors often wear body suits with these already attached. Very small markers (just 3 mm in size) can be attached directly to an actor’s face to reconstruct facial movements, all of which takes time and effort. In both cases, there’s an optimal number of markers: enough to highlight the motion required but not so many as to overcomplicate the processing.

Passive markers only reflect light and ideally require a consistently lit, artificial environment. As there’s no way to tell one marker from another, they can be hard to track when movement is complex, fast, or involves multiple characters. In such cases, the markers can be replaced by various types of active markers which emit their own light from LEDs. The light can be modulated in such a way that each marker can be uniquely identified. Some also operate outside of the visible spectrum (e.g. using infrared light), which interferes less with performances. In all cases, they operate best with dedicated stage sets and studios where multiple cameras are pre-installed and calibrated with dedicated lighting and computer facilities.
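As a toy illustration of how modulated light could identify a marker, the sketch below assumes each LED blinks a binary code, one bit per camera frame, and turns the observed brightness samples into an integer ID. The encoding scheme, the threshold, and the name `decode_marker_id` are hypothetical and not taken from any particular vendor’s system.

```python
def decode_marker_id(brightness, threshold=0.5):
    """Turn per-frame brightness samples into an integer marker ID.

    Assumes (hypothetically) one bit per frame, most significant bit first.
    """
    marker_id = 0
    for sample in brightness:
        bit = 1 if sample > threshold else 0
        marker_id = (marker_id << 1) | bit
    return marker_id


# Bright, dark, bright, bright -> binary 1011
marker_id = decode_marker_id([0.9, 0.1, 0.8, 0.95])
```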

Another approach is to use non-optical markers, which are sensors with built-in inertial or position measurement capable of independently tracking movement, inclination, and rotation in 3D. These systems (e.g. by Rokoko) lower costs because they don’t need multi-camera setups and can also be used more easily without dedicated stage sets (e.g. tracking an actor negotiating an assault course). They are, however, less accurate and can’t track facial expressions.
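One commonly cited reason for the lower accuracy of inertial sensors is drift: orientation is obtained by accumulating angular-rate readings over time, so small sensor errors add up. The sketch below shows that accumulation for a single axis; the function name `integrate_gyro`, the sample rate, and the bias value are illustrative assumptions.

```python
def integrate_gyro(rates_deg_per_s, dt, start_angle=0.0):
    """Accumulate angular-rate samples into an angle estimate (degrees)."""
    angle = start_angle
    angles = []
    for rate in rates_deg_per_s:
        angle += rate * dt
        angles.append(angle)
    return angles


# A stationary sensor with a small constant bias of 0.5 deg/s,
# sampled at 100 Hz for 60 seconds (hypothetical numbers).
drift = integrate_gyro([0.5] * 6000, dt=0.01)[-1]
```

In this example the estimate ends up roughly 30 degrees off even though the sensor never moved, which is why practical systems usually fuse several sensor types to correct for it.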

Markerless Mocap

Marker-based systems, described above, suffer from the drawback that they’re often intrusive to the actors or objects being tracked and can be expensive to use. To address these issues, two related technologies are emerging to capture motion without the use of attached markers of any sort. These “markerless” solutions detect and record movement remotely, leaving the target actors or objects free of any additional paraphernalia.

The first is the broad area of “RGB-D”, which combines normal RGB video with depth information, usually derived from projected infrared light (“structured light”) or lidar.

The classic commercial example of this technology is Microsoft’s Kinect, which has transformed 3D capture. In their simplest form, such solutions are used for detailed static 3D object scanning, but combined with the right software and multiple “cameras”, they can also be used to track and model real-time movements with surprising accuracy.
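To see how a colour pixel and its depth reading combine into a 3D point, the sketch below applies the standard pinhole camera model. The function name `pixel_to_point` and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are placeholder values chosen for illustration, not those of any specific sensor.

```python
def pixel_to_point(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth (metres) to camera-space XYZ."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# A pixel 100 columns right of the image centre, seen 2 m away.
point = pixel_to_point(420, 240, 2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

Repeating this for every pixel in a frame yields a point cloud, which tracking software can then fit a body model to.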

Such solutions are especially well suited to biomechanical assessment, which is advanced by companies such as Mar Systems, or for simple, cost-effective animation such as the solutions offered by Rush Motion.

The second relies on video alone. As video processing and artificial intelligence continue to advance, even the depth data can be derived semi-automatically from the footage, allowing markerless mocap to be achieved using ordinary video cameras. The most advanced of these systems, such as that offered by Move Ai, can track multiple people or actors moving at high speeds (e.g. playing team sports).

Markerless solutions can also capture facial expressions using video alone, although they do still need a camera to be attached to a framework in front of an actor’s face. Solutions such as MocapX are smart enough to work on an iPhone and have radically reduced the cost compared to traditional approaches.

Of course, technologies can be mixed and matched. For example, an inertial marker-based suit can be combined with a phone-based markerless system to capture facial expressions in order to bring real-time avatars to life, as first demonstrated by Kite & Lightning back in 2018.

Converting Mocap Data

Capturing mocap data is only the first step in creating animations with realistic movement.

File Formats

Mocap data is exchanged and shared with many different software systems, including animation tools (e.g. Maya, Cinema 4D), video game platforms (e.g. Unity, Unreal), and kinetic analysis packages. There are also multiple online libraries that have standard ways to share free or commercial pre-recorded movements. Motion capture systems generally store data in proprietary formats, but they also support export to others for easier interchange.

One of the most popular is C3D (Coordinate 3D), which stores three-dimensional data in a compact binary structure and is a standard format for biomechanics data storage. It’s used equally for clinical and more creative entertainment applications.

Other formats include Autodesk’s FBX (Filmbox) file format, which is mainly used for game development and animation. BVH (Biovision Hierarchy) files, originally devised by the motion capture company Biovision and used for characters in Second Life and various 3D video games, remain popular for simpler applications.
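BVH is a plain-text format with a HIERARCHY section describing the skeleton and a MOTION section holding one line of channel values per frame, which makes it easy to inspect. The sketch below reads a tiny hand-written sample; `SAMPLE_BVH` and `summarize_bvh` are made up for illustration, and the parser deliberately ignores details (such as joint offsets and nesting) that a real importer would need.

```python
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT Spine
  {
    OFFSET 0.0 10.0 0.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 10.0 0.0
    }
  }
}
MOTION
Frames: 2
Frame Time: 0.0333333
0 90 0 0 0 0 0 0 0
0 91 0 0 0 5 0 10 0
"""


def summarize_bvh(text):
    """Return joint names, total channel count, and motion frames."""
    joints, channels, frames = [], 0, []
    in_motion = False
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] in ("ROOT", "JOINT"):
            joints.append(tokens[1])
        elif tokens[0] == "CHANNELS":
            channels += int(tokens[1])
        elif tokens[0] == "MOTION":
            in_motion = True
        elif in_motion and tokens[0] not in ("Frames:", "Frame"):
            # Every remaining line is one frame of channel values.
            frames.append([float(t) for t in tokens])
    return joints, channels, frames


joints, channels, frames = summarize_bvh(SAMPLE_BVH)
```

Each frame line carries exactly as many numbers as the skeleton has channels, which is a handy sanity check when loading files from online libraries.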

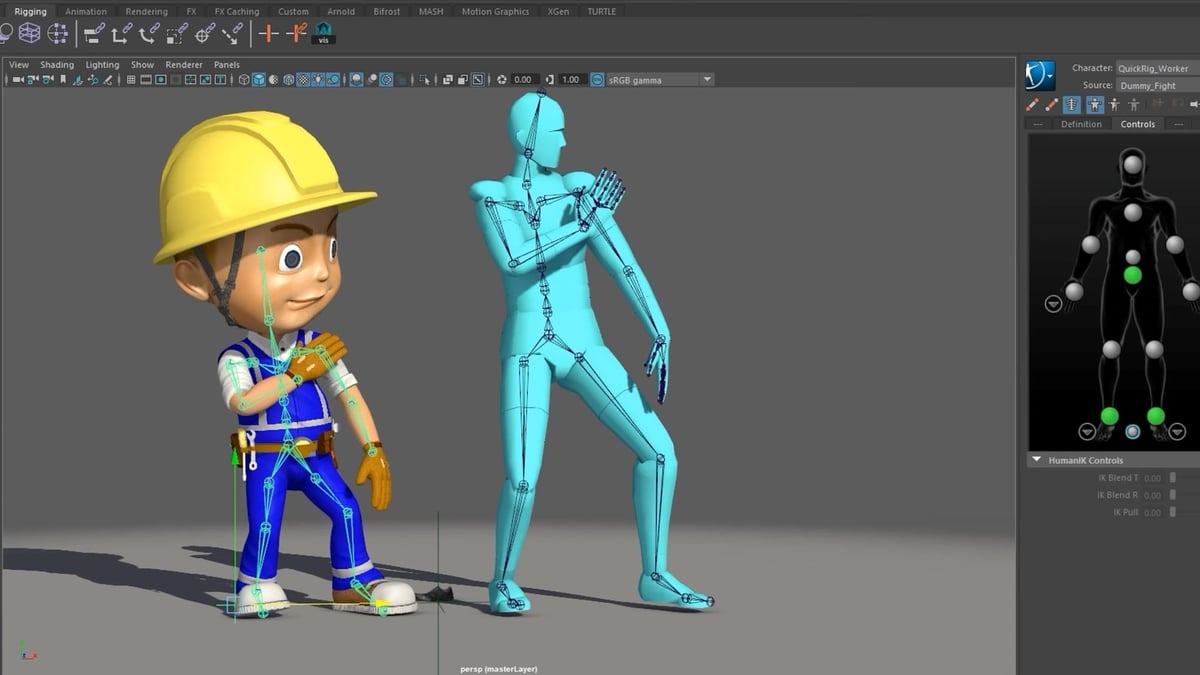

For video, film, and game applications, the most important step is to take 3D mocap data (often in the form of a “skeleton”) in an appropriate file format and map this to another model “rigged” to represent the target 3D character in a process known as retargeting.
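At its simplest, retargeting can be thought of as reusing the captured joint rotations on a skeleton with different proportions. The 2D sketch below applies the same angles to two arms with different bone lengths; the function name `forward_kinematics`, the bone lengths, and the angles are hypothetical, and real tools work in 3D and handle much more (joint naming, rest poses, foot contact, and so on).

```python
import math


def forward_kinematics(bone_lengths, joint_angles_deg):
    """Return 2D joint positions for a simple chain rooted at the origin.

    Each angle is relative to the parent bone.
    """
    x, y, heading = 0.0, 0.0, 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles_deg):
        heading += math.radians(angle)
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


# The same captured joint angles drive two differently proportioned arms.
captured_angles = [90.0, -90.0]  # shoulder, elbow (hypothetical values)
actor_arm = forward_kinematics([0.30, 0.25], captured_angles)
character_arm = forward_kinematics([0.15, 0.40], captured_angles)
```

Both arms strike the same pose, but the character’s hand ends up in a different place, which hints at why retargeted animation often needs manual clean-up.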

The Process

Most – if not all – of the major 3D modeling tools, as well as those for video and gaming, have dedicated functionality to make both retargeting and the editing of mocap data easy, whether for movement of overall body shapes or more detailed situations like finger movements or facial expressions. In this way, a human-scaled character played, for example, by Andy Serkis can be manipulated to the shape and expressions of Gollum, or Benedict Cumberbatch can be transformed into Smaug.

In the early days, processing mocap data and rendering the retargeted animations had to be done offline and took a lot of time. With vastly improved processing speeds, the growing trend is to process the motion capture in real time and to render drafts of the target animations at the same time. This gives actors and directors immediate feedback to help capture the desired performances.

There’s still a great deal of skill and creative artistic input required to make final, compelling animations, but they can be built on a firm foundation of the mocap data itself, set in whatever environment is appropriate for the desired end result.

The role of mocap continues to grow across multiple industries, and we’re likely to see it applied even more widely as costs fall and ease of use improves.

License: The text of "3D Motion Capture (Mocap) Animation: Simply Explained" by All3DP is licensed under a Creative Commons Attribution 4.0 International License.